Finally, Information is the New Oil

Over the past few decades, thought leaders have peddled the simplistic idea that data is the new oil. While some leaders transformed industries by harnessing knowledge graphs, the ability of firms to leverage their knowledge and data has been limited. The costs of integration, software development, and human capital transformation have kept data siloed, insights caricatured, and processes sub-optimized.

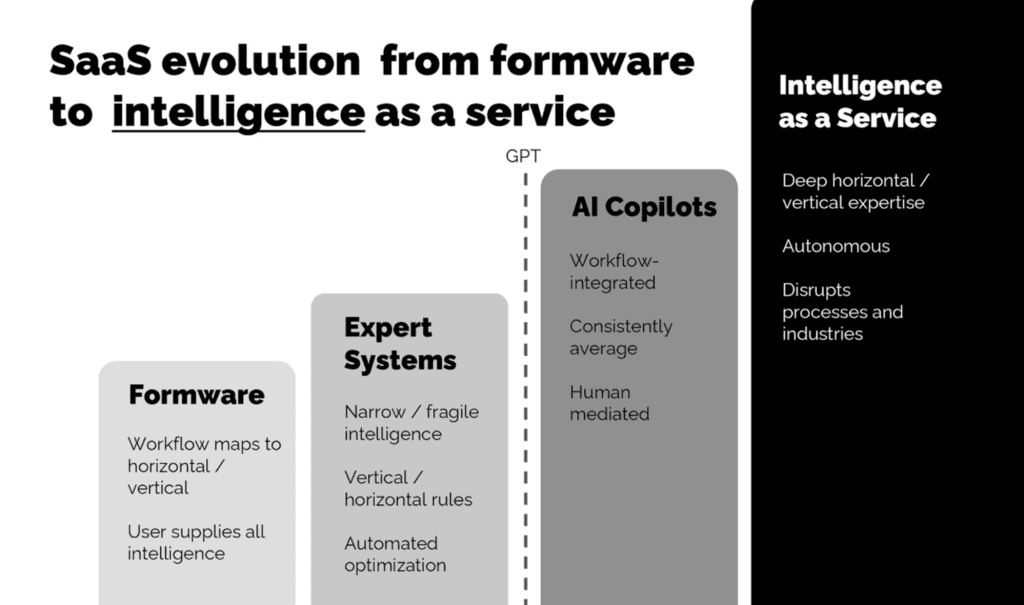

The disruption of the SaaS landscape by LLMs is already underway. We see the next phase of the AI revolution unfolding in two distinct stages: Copilots and Intelligence-as-a-Service.

Copilots

These tools will integrate increasingly specialized information and training. However, they still require human intermediation because they are wrong often enough to need minding. In many cases, they will be integrated into workflows that benefit from consistently average performance.

Intelligence-as-a-Service

More powerful and autonomous services are on the way. As copilots compete, the models will inevitably become good and subtle enough to stop requiring human minders. We expect that the companies that graduate to this more powerful level of intelligence will not only have mastered the best technologies and secured access to vast amounts of compute; they will also have created Data Flywheels. Sustainable access to non-public, hard-to-replicate data will be critical to achieving this level of sophistication.

While it is certainly possible that the models built by generative AI (GAI) leaders like OpenAI will subsume every area of human expertise, we are not convinced. The physics of the real world create powerful asymmetries of expertise in every domain. Unlimited compute does not guarantee unlimited access to every relevant piece of information.

Two Paths up the AI Mountain: Enablers & Data Flywheels

As the world rushes headlong to put a “Copilot on every desk”, there will be many massive winners. We see two categories: Enablers and Data Flywheels.

Picks and Shovels: The Enablers

Copilots are coming, and so are the players that enable intelligence to be brought to bear on thousands of domains. We have identified five classes of enablers today (detailed below). Our conviction is that the best opportunities for investment here will be tied to AI services ecosystems. We see a coming consulting boom as companies build private, custom copilots.

There will be winners among the host of widget and platform companies that help consultancies like Accenture bring solutions to the enterprise. Companies that help SaaS players integrate AI will also thrive, but we see them as ripe for disintermediation and disruption as AI leaders and the SaaS players themselves increasingly treat AI as a core differentiator to bring in-house.

Data Flywheels

The eventual Intelligence-as-a-Service titans probably don’t look like much today. Some may even have been late to generative AI, though most are ahead of the pack. What will set them apart is the ability to:

Get their users to feed them large amounts of non-public data.

Create the right user value to embed themselves into all the right workflows.

Secure massive market share in their niche—be it vertical or horizontal.

Eventually, whether or not they own their AI models will matter, but we contend that even some players derisively called “GPT Wrappers” will become so entrenched in hard-to-replicate contextual and business-logic data that they build real intelligence moats. It is still very early.

Will everyone who weds their future to Llama or OpenAI be making the right call? Time will tell. Our bets will be on teams that can create powerful flywheels and sticky experiences. Those that drip out the value of AI incrementally will become ever better copilots.

The Enablers: Picks & Shovels for the Copilot Gold Rush

Our experience with ChatGPT is instructive. If you write code (a domain it has been trained on intensively using public data), you probably use it all the time. If you don’t, you probably find it hard to integrate into your workflow. The reason is simple: it doesn’t know your context.

The Copilot gold rush comes down to creating models that know what you know about your job. Get ready for a million models or model-integrated apps to be created. Getting an AI model to either integrate local information directly (training) or have access to it when answering a question (context & reference) requires Machine Learning Ops (ML Ops). We’ve identified five key enabling tech areas:

Seed Models

From Llama 2 to Hugging Face, there is a growing array of choices when it comes to open-source models. These models can then be further pre-trained to get closer to the needed starting point.

Example cos: Hugging Face, Facebook.

Core Hosting

Spinning up a model so that it can be custom-trained and made available to internal systems requires specialized hosting services.

Example cos: Hugging Face, AWS, Thoughtworks, Predibase

Training Ops

Processing and preparing the data pipelines used to further tune or train models, for example, extracting tabular data from unstructured or semi-structured sources. This area also includes solutions that aim to make it easier to connect the dots between model outputs and training data.

Example cos: Unstructured, Pinecone.io

Context Window and Prompt Automation

From plugins and agents to open technologies such as Langchain, there is an explosion of interesting players that can drive automated interaction with LLMs. Look for tabular and multi-modal solutions here as well.

Example cos: Abacus.ai, Langchain, OpenAI

Reference Data Preparation

LLM applications use vector databases to massively expand the body of non-public data they can draw on in their responses. These databases store material as embeddings, which let an LLM “search” efficiently for relevant answers across large data spaces.

Example cos: Pinecone.io, Zilliz
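To make the “search” step concrete, here is a minimal sketch of retrieval feeding a prompt, under loudly stated assumptions: `embed`, `cosine`, `TinyVectorIndex`, and `build_prompt` are hypothetical names, a bag-of-words counter stands in for a learned embedding model, and a brute-force linear scan stands in for the approximate-nearest-neighbour indexes a vector database provides. It does not reflect the API of Pinecone, Zilliz, or any other vendor.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse bag-of-words vector.
    A real pipeline would call a learned embedding model here instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyVectorIndex:
    """Hypothetical in-memory index doing exact, brute-force similarity search.
    Vector databases replace this linear scan with approximate indexes."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, doc: str) -> None:
        # Store each document alongside its precomputed vector.
        self._items.append((doc, embed(doc)))

    def search(self, query: str, k: int = 2) -> list[tuple[str, float]]:
        # Score every stored document against the query and keep the top k.
        q = embed(query)
        scored = [(doc, cosine(q, vec)) for doc, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


def build_prompt(question: str, index: TinyVectorIndex, k: int = 2) -> str:
    """Paste the top-k retrieved passages into the prompt as context."""
    context = "\n".join(f"- {doc}" for doc, _ in index.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    index = TinyVectorIndex()
    for doc in [
        "Quarterly revenue grew in the enterprise segment.",
        "The HR policy grants 20 vacation days per year.",
        "Legal must review every vendor contract over $50k.",
    ]:
        index.add(doc)
    question = "How many vacation days does HR policy allow?"
    # The top hit is the HR policy passage, the only one sharing words with the query.
    print(index.search(question, k=1))
    print(build_prompt(question, index, k=1))
```

The model itself is never retrained here: private data reaches it only through the context pasted into the prompt, which is the “context & reference” route rather than the training route.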

There is an explosion of solutions in this area, and this structure is unlikely to last long. Our conviction is that there will be more opportunity to capture value by investing in the private LLM ecosystem than in solutions that mainly target SaaS companies and other AI specialists. We expect that enterprises will invest heavily in consulting services and internal teams devoted to creating private “crown jewel” LLMs.

These Centers of Intelligence within companies will focus on a given problem or department to provide game-changing effectiveness and efficiency for copilots. These engagements will need most or all of the enablers above. Even use cases that rely on public LLMs such as GPT-4 and its successors can derive significant value from enablers. Our focus is to predict where players like Accenture are headed and invest in the enablers best suited to win in that space.

Founder Takeaways – Enablers

We see massive near-term potential in the Private LLM ecosystem. Currently, players getting funded in the enabler spaces tend to target the early-adopter marketplace (SaaS/AI teams). Our advice to founders is to fit your solution into the service delivery package of large consultancies. This means toughing out the product-market fit journey and focusing on service-enabled sales as a way to build traction.

It is going to be hard to be product-led in some of these spaces, as players wake up to the opportunity for transformation and massive services sales. Those who stick it out will later be must-buy strategic acquisitions for large, diversified technology players.

Data Flywheels: Future Intelligence as a Service Leaders

With thousands of new AI SaaS companies formed in the last year, and all older firms claiming that they are “powered by AI”, how do founders stand out?

There are broadly two paths to a sustainable edge for early-stage SaaS companies looking for staying power:

Workflow-based data-moats

Job disrupters

Workflow-Based Data Moats

Some successful seed-stage founders in the AI space will follow the ‘tried and true’ SaaS startup approach: a disciplined initial niche, insightful tooling workflow, and distribution excellence. To win now, they will also need to integrate AI. Adding AI as a feature or enhancement can work when the niche, workflow, and distribution pieces are all in place. However, what will drive defensibility in the long term are data flywheels.

Startups today need to figure out how they “get paid” to amass truly unique dataspaces. The most obvious way to do that organically is to dominate workflows. As their data-space scales, disciplined investment in unlocking the value of the training data starts to compound, furthering their lead and deepening their advantage.

A lot of voices are boldly saying the only winners here will be incumbents. Because AI APIs are available to every player, it seems plausible that SaaS players with entrenched user bases will be able to bolt on AI value and keep their hold on workflows. The key opening for upstarts is to use AI to re-imagine workflows and provide game-changing productivity. That SaaS startup 24 months ahead of you will still struggle to go all in on AI workflows the way you can.

Job Disrupters – Intelligence as a Service

A riskier but potentially powerful strategy is to offer users the ability to hand over partial job functions entirely. When you aim to change your buyer’s relationship to a function or sub-function, you go beyond workflow transformation. In the B2B space, this is riskier in part because it forces buyers to take on much more organizational change as part of the adoption process. Many startups aiming to replace a given job function or sub-function will perish because they fail to anticipate what’s required to manage change.

The clear area of focus for these solutions is horizontal rather than vertical. Companies are much less likely to worry about disrupting jobs that are “non-core”. For some companies, those are administrative functions like HR, legal, and accounting.

Founder Takeaways – Data Flywheels

Your guiding light needs to be unfair access to data. Owning models adds to your enterprise value, but only insofar as the data you train them on is unique. Most emerging AI-forward companies will have to compete with agile SaaS companies that know their clients and can add powerful AI models in a couple of sprints. Differentiated success will come from companies that boldly reimagine their entire workflow with AI.

New players who aim to go directly for Intelligence-as-a-Service will be freed up to pursue job disruption, while existing players will be more wedded to the standard way of doing things. Founders have to balance boldness with deep customer insight and investment in managing change. Founders that focus on functions with very tight job markets will find the greatest success initially. As much as all organizations want to reduce costs, it is often hard to get the initial business if the cost reduction promise requires a complete rethink of how the unit or function does important work.


© 2024 Vantage Point Inc. All rights reserved.